Reading Time: 9 minutes

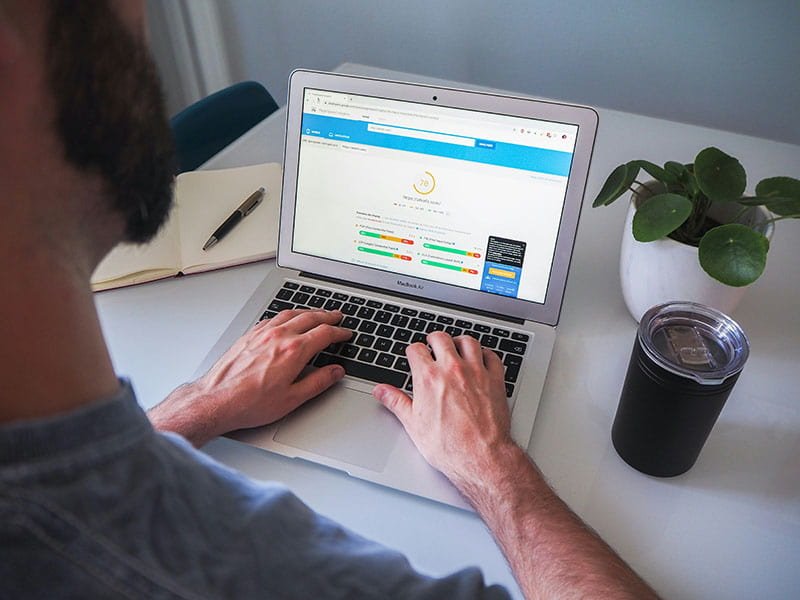

The Crucial Role of Image Compression in SEO

In search engine optimization (SEO), image compression is a fundamental strategy that affects several ranking factors. When images on a website are excessively large, they can significantly slow down page loading. Slower sites often see higher bounce rates, because users expect online content to appear almost immediately. Maintaining good page speed is therefore essential not only for user experience but also for retaining visitors, which in turn can influence search engine rankings.

Search engines, particularly Google, favor sites that deliver fast, efficient user experiences. When a website takes too long to load because of oversized images, its visibility on search engine results pages (SERPs) may suffer. That is why compressing images before uploading them is vital: compression reduces file size without a noticeable loss of quality, resulting in faster loading times and smoother navigation. Websites that load quickly are more likely to retain visitors, so fewer potential customers leave before interacting with the content.

Image compression also supports mobile optimization, an increasingly significant factor in SEO. With the rise of mobile browsing, websites must adapt to various screen sizes while still performing well. Compressed images give mobile users the same high-quality experience as desktop users, improving overall usability. Search engines also favor mobile-friendly sites, and Google now predominantly uses the mobile version of a page for indexing and ranking, which ties image optimization closely to effective SEO.

In conclusion, effectively compressing images is paramount for optimizing a website’s performance. By improving page load speed, enhancing user experience, and ensuring mobile compatibility, image compression plays a crucial role in a comprehensive SEO strategy aimed at boosting site visibility and engagement.

Impact of Image Compression on Site Speed

Site speed is an essential factor in both user experience and search engine optimization (SEO). Websites that load quickly are more likely to retain visitors, while slow-loading pages can result in increased bounce rates. Research indicates that a one-second delay in page load time can lead to a 7% reduction in conversions, highlighting the critical need for optimized website performance.

Large images are often a significant contributor to slow-loading pages. When high-resolution images are uploaded without compression, they consume considerable bandwidth, which in turn extends load times. For instance, a study by Google found that 53% of mobile site visitors abandon a page that takes longer than three seconds to load. Because unoptimized images are frequently what pushes a page past that threshold, reducing image size is one of the most direct ways to improve site speed.
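To see why file size matters so much, here is a back-of-the-envelope calculation in Python. It is a minimal sketch that assumes a steady 10 Mbps connection (a hypothetical figure) and ignores latency, protocol overhead, and parallel downloads, so real load times will differ; it only shows how raw transfer time scales with image weight.

```python
def transfer_seconds(size_bytes: int, bandwidth_mbps: float) -> float:
    """Idealised download time: bits to send divided by bits per second.

    Assumes a constant link speed and ignores latency and protocol overhead.
    """
    bits = size_bytes * 8
    return bits / (bandwidth_mbps * 1_000_000)


# Hypothetical example: a 3 MB unoptimised photo vs. a 300 KB compressed copy
# on an assumed 10 Mbps mobile connection.
for label, size in [("original", 3_000_000), ("compressed", 300_000)]:
    print(f"{label}: {transfer_seconds(size, 10):.2f} s")
# original: 2.40 s
# compressed: 0.24 s
```

A tenfold reduction in bytes gives a tenfold reduction in raw transfer time, which is why compression pays off most on slow mobile connections.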

Furthermore, the effects of slow site speed extend beyond user dissatisfaction; they also affect search engine rankings. Google has confirmed that page speed is one of the ranking factors in its algorithms. Pages that load faster are more likely to rank higher in search results, increasing visibility and attracting more traffic. Speed affects revenue as well: Amazon, for example, has reported that every 100 milliseconds of added latency cost it 1% in sales, so even a slight improvement in load times can translate into a significant difference in revenue.

Incorporating effective image compression techniques can drastically enhance site speed. Tools and plugins specifically designed for this purpose can reduce image file sizes without significantly compromising quality. By prioritizing image optimization, website owners can improve loading times, boost user satisfaction, and ultimately enhance their SEO performance.

Understanding the Necessity of Image Compression

In the digital landscape, the necessity of image compression cannot be overstated. As websites increasingly rely on various visual content, the effect of unoptimized images becomes apparent, influencing factors such as storage space, bandwidth usage, and overall loading times. When images are not compressed, they can occupy substantial storage space on servers, leading to higher operational costs for website owners. This is particularly critical for large websites with a significant volume of media content, where every additional megabyte can accumulate into substantial expenses.

Why Use Compression?

High-resolution images that have not been compressed can significantly slow down a website’s loading time. Site visitors have little patience for slow-loading pages, which results in higher bounce rates; as noted earlier, even a one-second delay can cut conversions by around 7%. Ensuring that images are optimized is therefore key to staying competitive in an industry where speed is a crucial part of the user experience.

Bandwidth usage is another critical factor. Uncompressed images consume more data, which is a significant drawback for users with slow internet connections or limited mobile data plans, and it can deter them from visiting the site, hurting traffic and engagement. Search engines also favor websites that load quickly, so sites slowed down by heavy image files may rank lower, reducing visibility and organic traffic.

In summary, image compression is far more than a cosmetic concern. It is a fundamental requirement for saving storage space, managing bandwidth effectively, shortening loading times, and improving SEO outcomes. As businesses strive to create efficient and user-friendly websites, investing in image compression becomes essential for operational success and a stronger online presence.

How to Efficiently Compress Images

Compressing images efficiently is essential for enhancing website performance and bolstering SEO strategies. Various methods are available, each suited to different needs and technical abilities. First, it is important to understand the difference between lossy and lossless compression. Lossy compression reduces file size by permanently removing some data, which suits photographs where a slight loss of quality is tolerable. Lossless compression, in contrast, reduces file size without any loss of quality, making it ideal for logos and graphics. Another effective way to shrink an image is simply to reduce its dimensions. For instance, your site may display an image in a 600×400 area while the stock image you uploaded is 1200×1800, so visitors download far more pixels than they can ever see. It is therefore important to pay attention to image scaling as well as compression.
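Both ideas can be sketched in Python using only the standard library. The `cover_resize` helper (a hypothetical name) computes how far to shrink an image so it still fills its display slot before cropping, and never upscales. The `lossless`/`lossy` pair is a toy illustration using DEFLATE via `zlib`: the lossy step simply discards the low bits of each value before compressing. This is not how JPEG actually works (JPEG quantises frequency coefficients rather than raw pixel values); in practice you would resize and compress in an image editor or a library such as Pillow.

```python
import zlib


def cover_resize(src_w: int, src_h: int, slot_w: int, slot_h: int,
                 density: float = 1.0) -> tuple[int, int]:
    """Scale an image so it fully covers a display slot (crop the excess afterwards).

    `density` is the device pixel ratio to support (e.g. 2.0 for high-DPI screens).
    The image is never upscaled.
    """
    target_w, target_h = slot_w * density, slot_h * density
    scale = min(1.0, max(target_w / src_w, target_h / src_h))
    return round(src_w * scale), round(src_h * scale)


def lossless(pixels: bytes) -> bytes:
    # DEFLATE is lossless: decompressing returns the exact original bytes.
    return zlib.compress(pixels, 9)


def lossy(pixels: bytes, drop_bits: int = 4) -> bytes:
    # Toy lossy step: zero the low bits of every value (quantisation),
    # then compress. The discarded detail cannot be recovered.
    mask = 0xFF & ~((1 << drop_bits) - 1)
    return zlib.compress(bytes(p & mask for p in pixels), 9)


# The article's example: a 1200x1800 stock image shown in a 600x400 slot.
print(cover_resize(1200, 1800, 600, 400))  # (600, 900), then crop to 600x400
print((1200 * 1800) / (600 * 400))         # 9.0 times more pixels than the slot shows
```

For high-DPI screens you might pass `density=2`, which keeps more pixels; the trade-off is a larger file in exchange for sharper images on those displays.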

Several tools and software options are available, ranging from desktop applications to online services. For desktop users, editors such as Adobe Photoshop and GIMP offer extensive image editing capabilities with built-in compression features, providing a breadth of settings that let users adjust quality and format to suit their requirements. (I would not recommend Photoshop for this, however: it can embed extra hidden data, such as metadata, into the image, creating a larger file.) Additionally, tools like ImageOptim and IrfanView are excellent for batch processing, enabling front-end designers to optimize multiple images simultaneously.
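If an editor has embedded extra data, it can be stripped without touching the pixels. The Python sketch below does this for PNG files using only the standard library: it walks the file’s chunks and keeps only those needed to draw the image (`IHDR`, `PLTE`, `tRNS`, `IDAT`, `IEND`), dropping ancillary chunks such as text comments and EXIF data. It is a minimal illustration, not a production tool: it does not validate CRCs, and it also drops colour-management chunks (`iCCP`, `sRGB`, `gAMA`), which can shift colours slightly, so add those to the keep-list if colour accuracy matters.

```python
import struct
import zlib

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"

# Chunks needed to decode and display the pixels. Everything else
# (tEXt, iTXt, zTXt, eXIf, tIME, colour profiles, ...) is dropped.
ESSENTIAL_CHUNKS = {b"IHDR", b"PLTE", b"tRNS", b"IDAT", b"IEND"}


def iter_chunks(data: bytes):
    """Yield (chunk_type, raw_chunk_bytes) for each chunk in a PNG file."""
    if not data.startswith(PNG_SIGNATURE):
        raise ValueError("not a PNG file")
    pos = len(PNG_SIGNATURE)
    while pos < len(data):
        (length,) = struct.unpack(">I", data[pos:pos + 4])
        chunk_type = data[pos + 4:pos + 8]
        end = pos + 12 + length  # 4 length + 4 type + payload + 4 CRC
        yield chunk_type, data[pos:end]
        pos = end


def strip_png_metadata(data: bytes, keep=ESSENTIAL_CHUNKS) -> bytes:
    """Return a copy of the PNG containing only the chunks listed in `keep`."""
    parts = [PNG_SIGNATURE]
    for chunk_type, raw in iter_chunks(data):
        if chunk_type in keep:
            parts.append(raw)
    return b"".join(parts)


def make_chunk(chunk_type: bytes, payload: bytes) -> bytes:
    """Build a PNG chunk with a correct CRC (used below to create a test file)."""
    crc = zlib.crc32(chunk_type + payload) & 0xFFFFFFFF
    return struct.pack(">I", len(payload)) + chunk_type + payload + struct.pack(">I", crc)


# Usage: a 1x1 grey PNG carrying a 500-byte text comment.
ihdr = make_chunk(b"IHDR", struct.pack(">IIBBBBB", 1, 1, 8, 0, 0, 0, 0))
comment = make_chunk(b"tEXt", b"Comment\x00" + b"x" * 500)
idat = make_chunk(b"IDAT", zlib.compress(b"\x00\x80"))  # filter byte + one pixel
iend = make_chunk(b"IEND", b"")
original = PNG_SIGNATURE + ihdr + comment + idat + iend

cleaned = strip_png_metadata(original)
print(len(original), "->", len(cleaned), "bytes")
```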

Online services such as TinyPNG, Compressor.io, and Optimizilla are convenient for those who prefer not to install additional software. These platforms support various file formats and offer straightforward upload processes, making them accessible for every user level. Utilizing these online services can considerably decrease loading times, thereby improving user experience.

Furthermore, integrating automated optimization tools into a website’s upload workflow can streamline the compression process. Plugins like Smush for WordPress or ShortPixel can automatically compress images on upload, ensuring that every image is optimized without manual intervention. Such automation not only saves time but also keeps image weight, and therefore site performance, consistently under control.
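The internals of plugins such as Smush and ShortPixel are not shown here. As a rough illustration of the kind of check an upload hook can run, the Python sketch below reads a PNG’s pixel dimensions straight from its header and decides which optimization steps to queue. The function names and the thresholds (1600 px wide, 300 KB) are hypothetical choices for this example, and only PNG is handled to keep it short.

```python
import struct

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def png_size(data: bytes) -> tuple[int, int]:
    """Read (width, height) from the IHDR chunk, which always comes first in a PNG."""
    if data[:8] != PNG_SIGNATURE or data[12:16] != b"IHDR":
        raise ValueError("not a PNG file")
    return struct.unpack(">II", data[16:24])


def upload_actions(data: bytes, max_width: int = 1600,
                   max_bytes: int = 300_000) -> list[str]:
    """List the optimization steps a hypothetical upload hook would queue."""
    actions = []
    width, height = png_size(data)
    if width > max_width:
        new_height = round(height * max_width / width)  # keep the aspect ratio
        actions.append(f"resize to {max_width}x{new_height}")
    if len(data) > max_bytes:
        actions.append("recompress")
    return actions
```

In a real hook, the queued resize and recompression would then be carried out by an image library.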

Ultimately, efficiently compressing images involves selecting the right tools and methods while incorporating automation to enhance workflow. This approach ensures that websites maintain high speed and performance, contributing positively to overall SEO efforts.

300 DPI vs 72 DPI: Understanding Image Resolution

When discussing image resolution, two commonly referenced settings are 300 DPI (dots per inch) and 72 DPI. Each reflects an intended use. DPI describes how many pixels are packed into each printed inch, so it directly affects print quality; on screen it matters indirectly, through the number of pixels an image is exported with, which in turn drives file size.

For online applications, 72 DPI is widely recognized as the standard resolution. It is generally sufficient for the web because browsers size images by their pixel dimensions rather than by DPI, so the extra pixel density a printer needs is wasted on screen. The main advantage of exporting web images at 72 DPI for their display size is that they balance visual quality against file size, helping websites load quickly. A faster-loading site enhances user experience and is viewed favorably by search engines, contributing to better SEO performance.

In contrast, 300 DPI is the preferred resolution for print applications. Images at this resolution contain a greater number of pixels per inch, providing higher detail and clarity, which are crucial for printed materials such as brochures, flyers, and high-quality photographs. Using 300 DPI images in a digital context, especially on websites, can lead to unnecessarily large file sizes, which may slow down page loading times and negatively impact overall site performance.
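The arithmetic behind this is simple: the pixels an image needs equal its printed size in inches multiplied by the DPI. The Python sketch below applies that formula to a hypothetical 8×10-inch print. It shows why a print-ready file is so much heavier: the pixel count grows with the square of the DPI, while the DPI label itself changes nothing about how a browser displays the image.

```python
def pixel_dimensions(width_in: float, height_in: float, dpi: int) -> tuple[int, int]:
    """Pixels needed to print at the given physical size and DPI."""
    return round(width_in * dpi), round(height_in * dpi)


print_px = pixel_dimensions(8, 10, 300)  # (2400, 3000)
web_px = pixel_dimensions(8, 10, 72)     # (576, 720)

# Pixel count scales with DPI squared: (300 / 72) ** 2 is about 17.4
ratio = (print_px[0] * print_px[1]) / (web_px[0] * web_px[1])
print(print_px, web_px, round(ratio, 1))  # (2400, 3000) (576, 720) 17.4
```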

In summary, understanding when to use 300 DPI versus 72 DPI is vital for effective image compression and optimization. For digital platforms, 72 DPI is generally recommended to boost site speed without sacrificing visual quality. Conversely, whenever high-quality prints are involved, 300 DPI images are essential to meet professional standards. By matching the resolution to the specific application, webmasters can enhance both user experience and SEO outcomes.

Choosing the Right Image File Format

Choosing the right image file format plays a crucial role in effective image compression and is essential for enhancing website performance and SEO. Among the most popular formats are JPG, PNG, SVG, and WEBP, each with its own strengths and weaknesses.

The JPG format is particularly favorable for photographs and images featuring gradients. Its compression algorithm efficiently reduces file size while maintaining acceptable quality, making it a go-to choice for web optimization. However, JPG does not support transparency, which limits its application in certain design elements.

On the other hand, PNG is known for its lossless compression, which retains the original quality and supports transparent backgrounds. This makes it ideal for graphics, logos, and images requiring high detail. However, PNG files tend to be larger than JPGs, which may affect loading times if not managed properly. For SEO, using PNG for specific elements can enhance clarity but should be balanced against overall site speed.

SVG files, or Scalable Vector Graphics, are a versatile option, particularly for logos, icons, and simple illustrations. The key advantage of SVGs lies in their scalability without loss of quality, which is invaluable for responsive designs. These files are also lightweight, making them excellent for improving site speed. However, they are not suitable for complex images such as photographs, which limits their use cases. SVGs are also a poor choice for graphics with a very large number of nodes or points, because every additional point adds to the file size.

Finally, WEBP is a newer format, developed by Google, that combines the best features of JPG and PNG: it offers both lossy and lossless compression and supports transparency. WEBP significantly reduces file sizes while retaining quality, making it an excellent choice for websites aiming for fast loading times, and Google’s PageSpeed Insights recommends serving images in next-generation formats such as WEBP.
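The guidance above can be condensed into a small decision helper. This is a hypothetical Python sketch that mirrors this article’s rules of thumb rather than any standard: SVG for simple vector artwork, WEBP whenever you can serve it, and otherwise PNG for transparency or flat graphics and JPG for photographs. The `max_svg_nodes` cut-off is an arbitrary illustrative value.

```python
from dataclasses import dataclass


@dataclass
class ImageTraits:
    is_vector: bool = False           # logo/icon drawn as shapes, not pixels
    vector_node_count: int = 0        # number of path nodes/points, if vector
    is_photo: bool = False            # photograph or smooth gradients
    needs_transparency: bool = False


def suggest_format(traits: ImageTraits, webp_ok: bool = True,
                   max_svg_nodes: int = 2000) -> str:
    """Pick a format following the rules of thumb in this article."""
    # Simple vector artwork: SVG scales without loss and stays small.
    if traits.is_vector and traits.vector_node_count <= max_svg_nodes:
        return "svg"
    # WEBP covers photos (lossy) and graphics with transparency (lossless).
    if webp_ok:
        return "webp"
    # Without WEBP: PNG for transparency or flat graphics, JPG for photos.
    if traits.needs_transparency or not traits.is_photo:
        return "png"
    return "jpg"
```

Keeping the rules in one function makes it easy to adjust a threshold without hunting through templates.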

In summary, understanding the distinct characteristics of each image file format is essential for effective image compression. By strategically selecting formats based on needs—balancing quality, file size, and SEO performance—webmasters can significantly enhance site speed and overall user experience.

Alt Text and Image Best Practices

Incorporating alt tags into images is a fundamental practice that fosters better SEO performance and enhances the overall user experience on a website. Alt text serves multiple purposes; primarily, it describes the content of images, which not only assists visually impaired users who rely on screen readers but also helps search engines in understanding the context of the visuals within the webpage. When optimized correctly, alt tags can significantly improve a site’s search engine visibility, thus contributing to better rankings in search results.

Best practices for writing effective alt text include being concise, descriptive, and relevant to the content on the page. Each alt tag should ideally be limited to around 125 characters and should not be overloaded with keywords to avoid penalties from search engines. Instead, focus on accurately portraying the image in relation to the surrounding text. For example, if an article discusses the benefits of image compression, a relevant alt tag for an accompanying graphic could be “Graph illustrating the speed benefits of image compression for web performance.” This approach ensures clarity and relevance, which are critical for both users and algorithms.
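A quick automated check can catch the two most common mistakes: overly long alt text and keyword stuffing. This Python sketch uses the roughly 125-character guideline above; the stuffing rule (flagging any word longer than three letters that appears more than twice) is an illustrative heuristic, not a published search-engine rule.

```python
import re
from collections import Counter


def check_alt_text(alt: str, max_len: int = 125, max_repeats: int = 2) -> list[str]:
    """Return a list of problems found in an image's alt text (empty list = OK)."""
    text = alt.strip()
    if not text:
        # alt="" is correct for purely decorative images, but not for content images.
        return ["empty alt text"]
    problems = []
    if len(text) > max_len:
        problems.append(f"{len(text)} characters; aim for about {max_len} or fewer")
    # Count longer words only, so "the", "of", "for" do not trigger the check.
    words = re.findall(r"[a-z0-9]+", text.lower())
    counts = Counter(w for w in words if len(w) > 3)
    repeated = sorted(w for w, n in counts.items() if n > max_repeats)
    if repeated:
        problems.append("possible keyword stuffing: " + ", ".join(repeated))
    return problems


print(check_alt_text("Graph illustrating the speed benefits of image compression for web performance."))
# []
```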

Furthermore, while integrating images into web pages and articles can enhance visual engagement, it is essential to strike a balance. Studies suggest that using between three and seven images per article can maintain interest without causing distractions or hindering loading speed. Overloading a page with images can slow it down, which is detrimental to SEO. Selecting high-quality images that complement the text, and compressing them according to best practices, will therefore yield the best results: a more enjoyable user experience and improved site performance.
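Both halves of that balance, the number of images and their combined weight, are easy to audit. In this Python sketch the three-to-seven range comes from the guidance above, while the 1 MB total image budget is a hypothetical figure chosen for illustration; set your own based on your audience’s connections.

```python
def audit_article_images(sizes_bytes: list[int], min_images: int = 3,
                         max_images: int = 7,
                         budget_bytes: int = 1_000_000) -> tuple[int, list[str]]:
    """Return (total image bytes, list of notes) for one article's images."""
    notes = []
    count = len(sizes_bytes)
    if count < min_images:
        notes.append(f"only {count} images; consider adding visuals")
    elif count > max_images:
        notes.append(f"{count} images; consider trimming")
    total = sum(sizes_bytes)
    if total > budget_bytes:  # hypothetical page-weight budget
        notes.append(f"total image weight {total:,} bytes exceeds {budget_bytes:,}")
    return total, notes


print(audit_article_images([200_000] * 4))  # (800000, [])
```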

Stock Images vs. Original Photography: Making the Right Choice

When it comes to selecting imagery for a website, brands face a crucial decision between stock images and original photography. Each option carries its own set of advantages and drawbacks that can significantly impact brand perception, search engine optimization (SEO), and overall user engagement.

Stock images are often readily available, offering a vast library of visuals that can support various themes and concepts. They are typically less expensive than commissioning original photography, making them a popular choice for businesses with budget constraints. Moreover, stock images can be downloaded and integrated into a website quickly, which allows for swift implementation during time-sensitive marketing campaigns. However, the primary drawback of stock images is their lack of originality. Since many other businesses may utilize the same images, there is a risk that brands will not stand out, potentially leading to a diluted brand identity.

On the other hand, original photography offers a unique advantage in terms of originality and branding. Custom images can effectively convey a brand’s personality, values, and unique selling propositions that resonate well with target audiences. This personalized approach not only enhances engagement but can also contribute positively to SEO, as search engines favor unique content. Furthermore, businesses that use original photography can avoid copyright issues often associated with stock images, where improper licensing can lead to legal complications.

In deciding between stock images and original photography, brands should carefully consider their specific needs, budget constraints, and the desired impact on audience engagement. Ultimately, the right choice depends on a balance between cost-effectiveness and the ability to craft a distinctive brand narrative. Leveraging custom images may require a higher initial investment, but the potential benefits in terms of originality and SEO could yield long-term rewards for a brand’s identity and online performance.

Image Compression in Summary

In conclusion, image compression and image scaling can shrink your pages and speed up load times, both of which Google rewards in its rankings. Just imagine a site with icons, a logo, charts, infographics, background images, and supporting images for every paragraph: they all add up and can really bog down loading time. Two other techniques worth mentioning, lazy loading and image sprites, can also have a positive impact on SEO and loading times.
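Both techniques come down to markup and CSS. Lazy loading defers off-screen images until the visitor scrolls near them, and a sprite combines many small icons into one image file so the browser makes fewer requests. The Python sketch below (helper names are hypothetical) builds an `<img>` tag with the browser’s native `loading="lazy"` attribute, plus explicit `width` and `height` so space is reserved before the image arrives, and computes CSS `background-position` offsets for icons packed left to right in a single sprite strip. Avoid lazy-loading images that are visible as soon as the page opens, such as a hero image, since that can delay them.

```python
from html import escape


def img_tag(src: str, alt: str, width: int, height: int, lazy: bool = True) -> str:
    """Build an <img> tag; lazy=True defers loading until the image nears the viewport."""
    attrs = [
        f'src="{escape(src)}"',
        f'alt="{escape(alt)}"',
        f'width="{width}"',
        f'height="{height}"',
    ]
    if lazy:
        attrs.append('loading="lazy"')
    return "<img " + " ".join(attrs) + ">"


def sprite_offsets(icon_widths: list[int]) -> list[str]:
    """CSS background-position for each icon in a left-to-right sprite strip."""
    positions, x = [], 0
    for width in icon_widths:
        positions.append(f"-{x}px 0" if x else "0 0")
        x += width
    return positions


print(img_tag("chart.webp", "Load time chart", 600, 400))
# <img src="chart.webp" alt="Load time chart" width="600" height="400" loading="lazy">
print(sprite_offsets([16, 24, 16]))  # ['0 0', '-16px 0', '-40px 0']
```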

Thanks for reading and happy posting! 👍
